This blog post describes the first phase of Usage Centered Design - modeling users and their tasks. A later post will describe how to use those models to design a user interface.

Why model users?

The first key insight to understand is that we're not actually designing for users. We're designing to let people do things. This raises two important questions:

- How can we best describe the relevant aspects of these people and how they need to interact with the system?

- How can we best describe the things that they have to do?

For reference, an example persona and a set of example scenarios:

- http://chopsticker.com/2007/06/08/download-an-example-persona-used-in-the-design-of-a-web-application/

- http://www.uiaccess.com/accessucd/scenarios_eg.html

How can we model users?

This is really two questions.

- What relationship between a user and a system do we want to model?

- What information about that relationship do we want to capture?

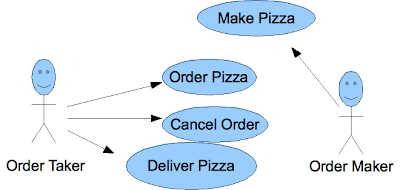

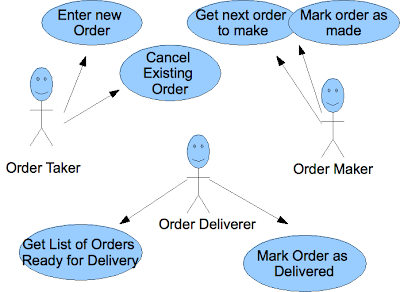

For example, in a pizza company we can model the users by creating several different roles:

- Telephone Answerer

- Order Maker

- Order Deliverer

- Staff Roster Maintainer

- etc

We also record some information about each role. This can include:

- The context of use (front of shop, potential interruption by customers)

- Characteristics of use (customers have a tendency to change their minds)

- Criteria (speed, simplicity, accuracy)
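A role and its supporting information can be sketched as a small data structure. This is only an illustration: the class and field names are my own assumptions, and since the post does not say which role the example context, characteristics and criteria belong to, they are attached to the Telephone Answerer purely for the sake of the example.

```python
from dataclasses import dataclass, field


@dataclass
class UserRole:
    """One role a person can play in relation to the system (hypothetical structure)."""
    name: str
    context: list[str] = field(default_factory=list)          # where and how the role is played
    characteristics: list[str] = field(default_factory=list)  # typical patterns of use
    criteria: list[str] = field(default_factory=list)         # what the design should optimise for


# Roles from the pizza company example.
roles = [
    UserRole(
        name="Telephone Answerer",
        # Assumption: the details below are assigned to this role for illustration only.
        context=["front of shop", "potential interruption by customers"],
        characteristics=["customers tend to change their minds"],
        criteria=["speed", "simplicity", "accuracy"],
    ),
    UserRole(name="Order Maker"),
    UserRole(name="Order Deliverer"),
    UserRole(name="Staff Roster Maintainer"),
]


def roles_needing(criterion: str, all_roles: list[UserRole]) -> list[str]:
    """Return the names of the roles that list the given design criterion."""
    return [r.name for r in all_roles if criterion in r.criteria]
```

Keeping the criteria alongside each role makes it easy to ask questions such as "which roles need speed above all?" when design trade-offs come up later.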

How can we model the things that users do?

Essential Use Cases are used in Usage Centered Design to model the things that users do. Each use case describes a particular task a user has to do with the system (or a goal they want to achieve with the system).

Essential Use Cases are just like ordinary use cases except:

- When writing them we have an unholy focus on the minimal, essential interaction that is necessary for the user to achieve their goal. We assume that this use case is all that the user will be using the system for - resolving the navigation between different use cases is done later.

- They're written in a 2-column format in order to visualize the necessary interaction.

- We write the use case from the user's perspective - the interactions that they want to have first come first in the use case. If they don't care about order then we only have one step.
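To make the two-column format concrete, here is a minimal sketch. The two columns are conventionally labelled "user intention" and "system responsibility"; the class names and the "Take order" use case itself are invented for illustration and are not taken from a real project.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One row of the use case: exactly one of the two columns is normally filled in."""
    user_intention: str = ""
    system_responsibility: str = ""


@dataclass
class EssentialUseCase:
    name: str
    role: str
    steps: list[Step]

    def render(self, width: int = 28) -> str:
        """Lay the steps out as a two-column plain-text table."""
        header = f"{'User intention':<{width}} | System responsibility"
        lines = [f"{self.name} ({self.role})", header, "-" * len(header)]
        for s in self.steps:
            lines.append(f"{s.user_intention:<{width}} | {s.system_responsibility}")
        return "\n".join(lines)


# Hypothetical use case content, written in the order the user cares about.
take_order = EssentialUseCase(
    name="Take order",
    role="Telephone Answerer",
    steps=[
        Step(user_intention="identify customer"),
        Step(system_responsibility="show delivery details"),
        Step(user_intention="choose pizzas"),
        Step(system_responsibility="show total price"),
        Step(user_intention="confirm order"),
    ],
)

print(take_order.render())
```

Note that nothing in the steps mentions screens, buttons or fields. The use case only records what the user intends and what the system has to take responsibility for.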

In contrast, Cooper's methodology models how a particular user might actually achieve a particular goal - with all the contextual information in there.

Aren't these typical Business Analysis artifacts?

Yes, the models used in Usage Centered Design are models that Business Analysts use on a daily basis. The difference is in how the models are used. In Usage Centered Design, the analyst has to have a constant focus on the user and how they're likely to use the system. This might involve a serious amount of user research if the tasks and how they're achieved are ambiguous (or, in a pizza company, it might not :).

I've found that it's pretty easy to explain the focus of a use case and a user role map to business stakeholders. I've also found that once they've "got it" the type of information I'm getting from them changes - we start talking about requirements instead of solutions!

That's all good, but how do we use them?

Stay tuned for the next update: Usage Centered Design - using the models.